07. Tuning Parameters Manually

Tuning Parameters

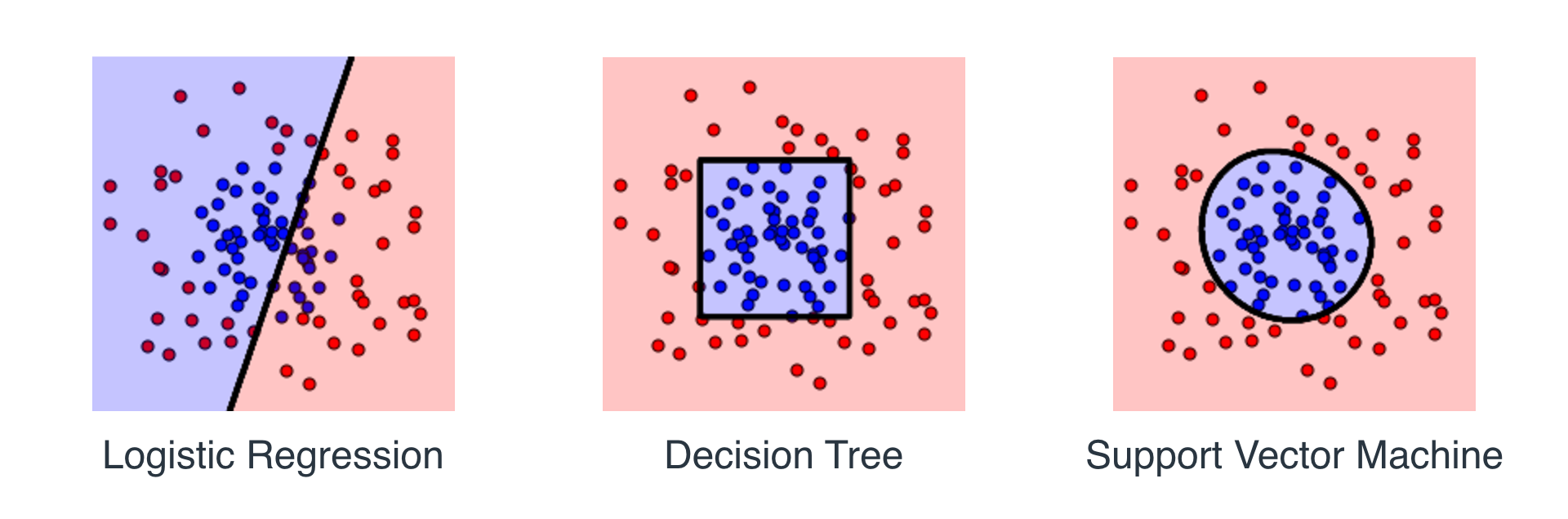

So it looks like 2 out of 3 algorithms worked well last time, right? This are the graphs you probably got:

It seems that Logistic Regression didn't do so well, as it's a linear algorithm. Decision Trees managed to bound the data well (question: Why does the area bounded by a decision tree look like that?), and the SVM also did pretty well. Now, let's try a slightly harder dataset, as follows:

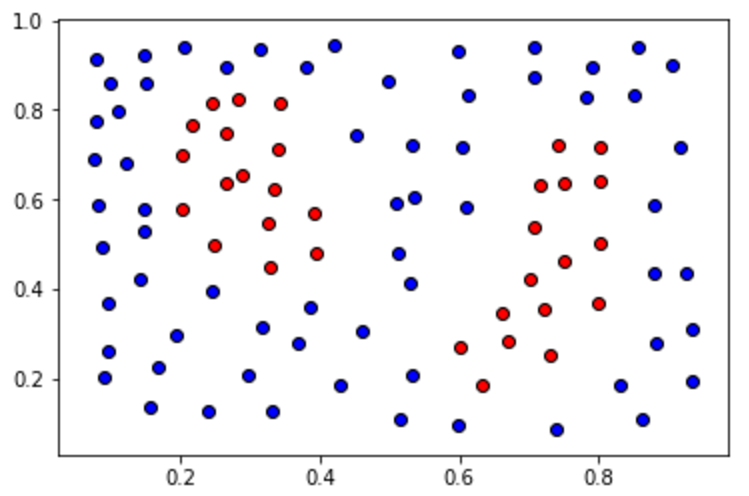

Let's try to fit this data with an SVM Classifier, as follows:

>>> classifier = SVC()

>>> classifier.fit(X,y)If we do this, it will fail (you'll have the chance to try below). However, it seems that maybe we're not exploring all the power of an SVM Classifier. For starters, are we using the right kernel? We can use, for example, a polynomial kernel of degree 2, as follows:

>>> classifier = SVC(kernel = 'poly', degree = 2)Let's try it ourselves, let's play with some of these parameters. We'll learn more about these later, but here are some values you can play with. (For now, we can use them as a black box, but they'll be discussed in detail during the Supervised Learning Section of this nanodegree.)

- kernel (string): 'linear', 'poly', 'rbf'.

- degree (integer): This is the degree of the polynomial kernel, if that's the kernel you picked (goes with poly kernel).

- gamma (float): The gamma parameter (goes with rbf kernel).

- C (float): The C parameter.

In the quiz below, you can play with these parameters. Try to tune them in such a way that they bound the desired area! In order to see the boundaries that your model created, click on Test Run.

Note: The quiz is not graded. But if you want to see a solution that works, look at the solutions.py tab. The point of this quiz is not to learn about the parameters, but to see that in general, it's not easy to tune them manually. Soon we'll learn some methods to tune them automatically in order to train better models.

Start Quiz: